CHAMPAGNE: Learning Real-world Conversation from Large-Scale Web Videos (ICCV 23)

March 20, 2023

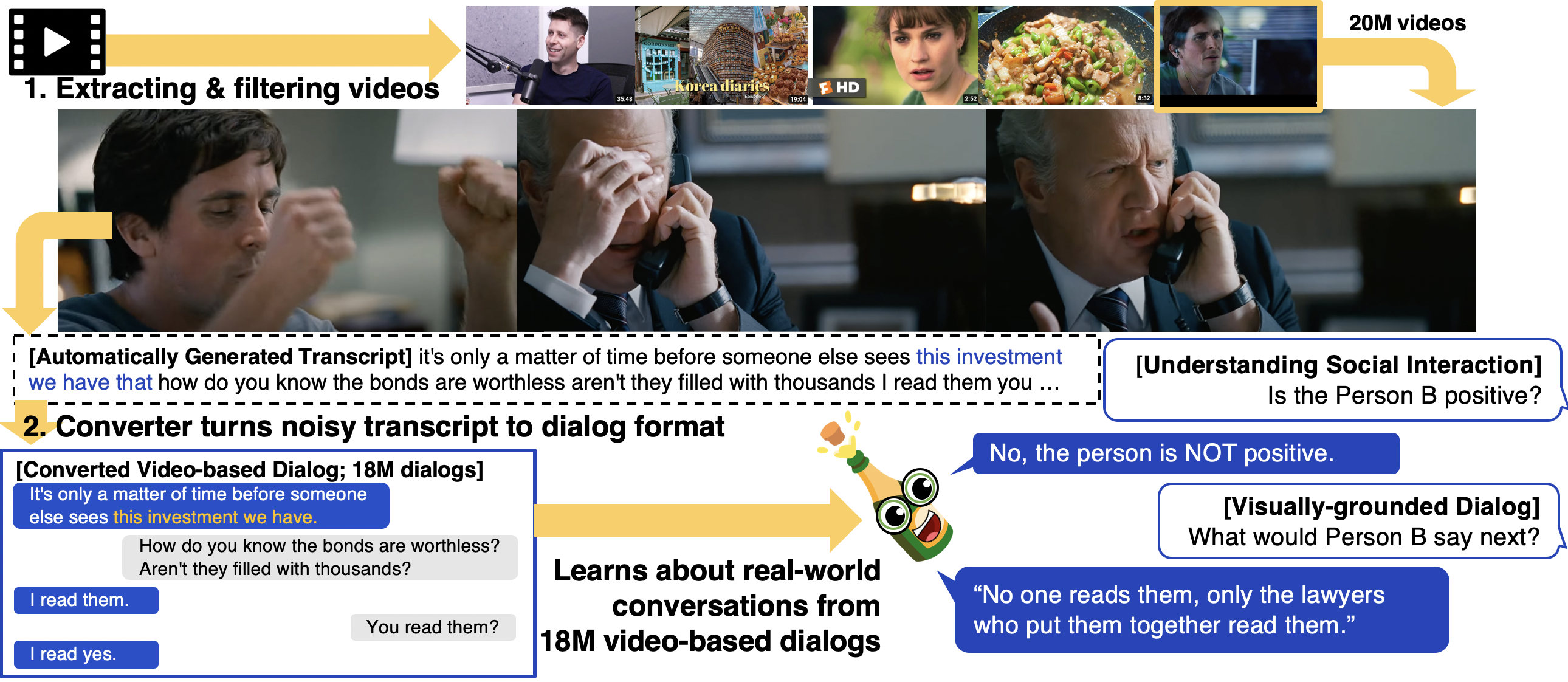

We provide a large-scale dataset of 18M video-based dialogues, and introduce a generative model of conversations that learns from video-based dialogues so that it accounts for visual contexts.

Visual information is central to conversation: body gestures and facial expressions, for example, contribute to meaning that transcends words alone. To date, however, most neural conversational models are limited to just text. We introduce CHAMPAGNE, a generative model of conversations that can account for visual contexts. To train CHAMPAGNE, we collect and release YTD-18M, a large-scale corpus of 18M video-based dialogues. YTD-18M is constructed from web videos: crucial to our data collection pipeline is a pretrained language model that converts error-prone automatic transcripts to a cleaner dialogue format while maintaining meaning.

Human evaluation reveals that YTD-18M is more sensible and specific than prior resources (MMDialog, 1M dialogues), while maintaining visual-groundedness. Experiments demonstrate that 1) CHAMPAGNE learns to conduct conversation from YTD-18M; and 2) when fine-tuned, it achieves state-of-the-art results on four vision-language tasks focused on real-world conversations.

Code

Official codebase for CHAMPAGNE on GitHub, including model training code (based on JAX).

Dataset

The dataset consists of four subsets; whether an example is safe or unsafe is determined by the Rewire API. The GPT-3 generated YTD is used to train the converter model; the rest of the data (YTD-18M) is generated by the converter model and used to train CHAMPAGNE.

- GPT-3 (text-davinci-003) generated YTD (40K examples)

  - Safe: https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/data/ytd_gpt3_safe_json.tar.gz

  - Unsafe: https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/data/ytd_gpt3_unsafe_json.tar.gz

- YTD-18M (18M examples)

  - Safe: https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/data/ytd_converter_safe_json.tar.gz

  - Unsafe: https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/data/ytd_converter_unsafe_json.tar.gz
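The four archive URLs above follow a regular naming pattern, so they can be built programmatically. The following is a minimal sketch using only the Python standard library. The helper names `archive_url` and `download_and_extract` and the `data` output directory are hypothetical and are not part of the official codebase. The layout of the files inside each archive is not documented here, so the sketch stops after extraction.

```python
import tarfile
import urllib.request
from pathlib import Path

# Common prefix of the four dataset archives listed above.
BASE_URL = "https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/data"


def archive_url(subset: str, safe: bool) -> str:
    """Build the archive URL for a subset.

    subset: "gpt3" (GPT-3 generated YTD) or "converter" (YTD-18M).
    safe:   True for the safe split, False for the unsafe split.
    """
    if subset not in ("gpt3", "converter"):
        raise ValueError(f"unknown subset: {subset!r}")
    label = "safe" if safe else "unsafe"
    return f"{BASE_URL}/ytd_{subset}_{label}_json.tar.gz"


def download_and_extract(subset: str, safe: bool, out_dir: str = "data") -> Path:
    """Download one archive (if not already present) and unpack it into out_dir.

    Hypothetical helper: the directory structure produced by extraction
    depends on the archive contents and is not assumed here.
    """
    url = archive_url(subset, safe)
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    archive = out / url.rsplit("/", 1)[-1]
    if not archive.exists():
        urllib.request.urlretrieve(url, str(archive))
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(out)
    return out
```

For example, `download_and_extract("gpt3", safe=True)` would fetch the smaller 40K-example subset, which is probably the more convenient one to inspect first.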

Each example looks like this:

{'youtube_id': 'iaEcUB1aMMI',

'start_sec': 120.0,

'end_sec': 180.0,

'transcription': "now what we're going to do we're going to go ahead we're going to install this camshaft now I want to do this now because I can control both sides of the camshaft as I go through the bearings you could do this with just the front of the camshaft but it is a little bit more difficult so I'm just to send it right now well I've got this little bit of space where I can work now that camshaft is installed i'm going to go aheadand i'm going to put some assembly lube on all of these camshaft loads now the camshaft",

'dialogue': ['Now what are we going to do?',

"We're going to go ahead and install this camshaft. Now I want to do this now because I can control both sides of the camshaft as I go through the bearings. You could do this with just the front of the camshaft, but it is a little bit more difficult so I'm just sending it right now.",

'Okay.']}

To protect user privacy, we are releasing the text scripts of the videos, but not the videos themselves. You can use the provided code to download and preprocess the videos.
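Because each record carries a `youtube_id` together with `start_sec` and `end_sec`, the matching video segment can be located from the text alone. The sketch below works on a record shaped like the example above (with the second turn shortened). It shows how one might derive a timestamped watch URL and split the `dialogue` list into (context, response) pairs. The function names are hypothetical. The pairing scheme is a common way to prepare dialogue data, and it is an assumption here rather than a description of how CHAMPAGNE's training code consumes the data.

```python
from typing import Any, Dict, List, Tuple

# A record shaped like the example above; the second turn is shortened here.
record: Dict[str, Any] = {
    "youtube_id": "iaEcUB1aMMI",
    "start_sec": 120.0,
    "end_sec": 180.0,
    "dialogue": [
        "Now what are we going to do?",
        "We're going to go ahead and install this camshaft.",
        "Okay.",
    ],
}


def clip_url(rec: Dict[str, Any]) -> str:
    """Return a YouTube watch URL that starts playback at the clip's start time."""
    return f"https://www.youtube.com/watch?v={rec['youtube_id']}&t={int(rec['start_sec'])}s"


def clip_duration(rec: Dict[str, Any]) -> float:
    """Length of the clip in seconds."""
    return rec["end_sec"] - rec["start_sec"]


def context_response_pairs(rec: Dict[str, Any]) -> List[Tuple[List[str], str]]:
    """Pair every turn after the first with all turns that precede it."""
    turns = rec["dialogue"]
    return [(turns[:i], turns[i]) for i in range(1, len(turns))]


if __name__ == "__main__":
    print(clip_url(record))
    print(clip_duration(record))
    for context, response in context_response_pairs(record):
        print(len(context), "context turn(s) ->", response)
```

A three-turn dialogue yields two pairs: the first turn as context for the second, and the first two turns as context for the third.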

Model Weights

We release three CHAMPAGNE model checkpoints, trained with the T5X framework on TPUs.

- Base: https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/models/base~ytd18M~span.tar.gz

- Large: https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/models/large~ytd18M~span.tar.gz

- XL: https://storage.googleapis.com/ai2-mosaic-public/projects/champagne/models/xl~ytd18M~span.tar.gz

Citation

If the paper inspires you, please cite us:

@article{han2023champagne,

title={CHAMPAGNE: Learning Real-world Conversation from Large-Scale Web Videos},

author={Han, Seungju and Hessel, Jack and Dziri, Nouha and Choi, Yejin and Yu, Youngjae},

journal={arXiv preprint arXiv:2303.09713},

year={2023}

}

Authors

Seungju Han¹, Jack Hessel², Nouha Dziri², Yejin Choi²,³, Youngjae Yu²,⁴

¹Seoul National University, ²Allen Institute for AI, ³University of Washington, ⁴Yonsei University